An excerpt from SEOpedia.org.

Here they explain how to get Sitelinks for your site in Google's results, and why it's beneficial.

Please feel free to read the original article here.

-enjoy-

by Cristian Mezei

I have been testing these Sitelinks for quite some time now on several websites, from new to very old, and from a handful to huge numbers of visitors.

What are Sitelinks? They are a collection of links, chosen automatically by Google’s algorithm, that appear below a website's search result and link to the main pages of that website. They seem to be chosen at random, although you can block any link from appearing. We will discuss Sitelinks in more detail in the Google Sitelinks FAQ section below.

Recently, some of my websites got Sitelinks whilst I was trying different ways of reaching this milestone.

Some time ago, Vanessa Fox, from the Webmaster Central blog, wrote that the Google Help page describing these Sitelinks had been updated to reflect “information on how Google generates these links”. That’s crap, to say the least, because that Google Help page only states that Sitelinks exist and are generated automatically, and nothing more.

Although Google offers no official explanation beyond this very basic page, I will try to write down a few of my own ideas about when and how to get these Sitelinks for your website. Whilst I can’t promise you guys that ALL of the procedures below are involved in making Sitelinks appear for your website, I can definitely guarantee you that SOME are.

I say this mainly because, for months and years, I have always tried 4 to 6 procedures at a time, so I can’t really know which one contributed most to the appearance of Sitelinks.

Procedures which may be involved in the appearance of Sitelinks:

- The number of links pointing to your website’s index page that use your website's main keywords as anchor text. For example, for my blog, the two main keywords are “Cristian Mezei”, my name, and “SeoPedia”, the name of my blog. Sitelinks appear only for a few main keywords, not for every keyword your website ranks for.

- The number of searches and SERP clicks for the main keywords described above. A keyword needs a certain number of clicks to meet the minimum requirement for Sitelinks to appear, so keywords that are not searched often enough never get Sitelinks. Although some of my colleagues have said that traffic has nothing to do with Sitelinks, and others that it has everything to do with them, I firmly believe that traffic for a particular keyword or keyphrase is very important.

- The number of indexed pages for the keyword you are targeting is also important. Please keep in mind that I am not talking about the number of indexed pages for your website, but about the number of results shown in Google for that particular keyword.

- The age of the website is definitely a factor in how and when Sitelinks appear. As far as my tests go, for a naturally and organically built website (no extensive or forced SEO), you can NOT have Sitelinks if the website is younger than 18-24 months, varying from case to case.

- You have to rank #1 for that particular keyword (and the ranking has to be stable) to have any Sitelinks at all. This is very important, and it has held true 100% of the time.

Misleading advice about Google Sitelinks

Many other specialists and bloggers around the industry have tried to help you figure out ways to get Sitelinks, but I will try to contradict them, because some of that advice may not contribute to your effort at all: it is just too general, and in my experience it can lead to dead ends. Some of this advice is:

- Making your website W3C valid. This is not a bad thing, but I highly doubt that it will make your website more prone to get Sitelinks. A lot of people have reported building their website with erratic code from 1992, and still having Sitelinks.

- Having links from powerful websites. I doubt that this aspect will help you in getting Sitelinks at all. Have a look at how I see inbound links having an effect, above (in the Procedures section).

- Having a lot of links (generally). I doubt that having tens of thousands of links of any kind will move you up the ladder as far as Sitelinks go. Links do help, but I have explained above (in the Procedures section) specifically in what way.

- Some advice amounted to little more than “Make the website useful” or “Add Meta tags”. While these are surely helpful for any website, they may have nothing to do with your website getting Sitelinks.

- Having a very well designed navigation menu. Websites with erratic navigation menus and internal links, as well as websites with very well designed ones, have all got Sitelinks.

- PageRank has nothing to do with Sitelinks. There are PR7 and PR2 websites that got Sitelinks.

Although I don’t want to contradict my fellow colleagues (I just did, but well...), the above are my personal opinions and I wanted to stress them. The reason I didn’t name names is obvious.

And as the title of my post says, below you’ll find the FAQ section, where I have tried to answer most, if not all, of the questions that popped up over the past year from all kinds of readers:

Google Sitelinks: The FAQ

Q: When are Sitelinks generated? Is there some kind of PageRank-like update?

A: I want to stress that about 4 of my websites got Sitelinks within exactly the same 1-2 day period, although the websites are very different from one another. One is 2 years old, another is 3.5. One has 1,000 links, another has 40,000. One is in the auto industry, one is my blog. They do not link to each other. All of this makes me think that there is some kind of general Sitelinks update, much like the updates for PageRank, inbound links or Google Images. Since QOT got their Sitelinks on exactly the same day (6th Feb.) as many of my other websites, I am positive that there is a general Sitelinks update.

Q: I can’t see any Sitelinks generated within my Sitemaps account, although they appear in Google!

A: Sitelinks take anywhere from 2 weeks to 1 month to appear within your Sitemaps account, after they first appeared in the SERPs. Then you will have better control over some of the links.

Q: Why doesn’t my very important “Clients” page appear in the Sitelinks section?

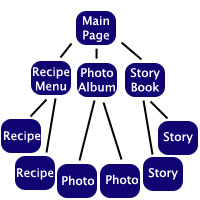

A: This may be because Sitelinks are usually generated from first-level links only, that is, links on your home page. If a page is only reachable in two clicks, it will never be included in the Sitelinks section. On rare occasions deep links are chosen, but I am not sure how those websites are picked. Also make sure that you use plain HTML links, not JavaScript or Flash.

Q: My website doesn’t have many text links. Does this mean I’m doomed?

A: Google will generate Sitelinks from image links too, as long as the image has an ALT attribute. As other people have also found, the Sitelinks algorithm may choose a page even if your own website doesn't link to it, provided that page has a large number of links from other websites.
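The two answers above boil down to a checkable question: which links does your home page expose as plain HTML, and what anchor text (or image ALT text) would a crawler see for each? Below is a minimal Python sketch, using only the standard library's `html.parser`, that lists them. It assumes you already have the home page HTML as a string; the class and function names are hypothetical, and the 30-character limit for "short" anchor text is an arbitrary assumption, not a figure published by Google. Links injected by JavaScript or Flash will not appear in the report at all, which is the point: if a link is missing here, a crawler reading static HTML probably can't see it either.

```python
from html.parser import HTMLParser

MAX_LABEL_LEN = 30  # assumption: rough limit for "short" anchor text, not a Google figure


class HomeLinkCollector(HTMLParser):
    """Collects (href, label) for every <a href> found in static HTML.

    The label is the link's visible text plus the ALT text of any image inside it,
    roughly what a crawler reading plain HTML could use as anchor text.
    """

    def __init__(self):
        super().__init__()
        self.links = []      # list of (href, label) pairs
        self._href = None    # href of the <a> currently open, if any
        self._parts = []     # text fragments and ALT text inside that <a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            self._href = attrs["href"].strip()
            self._parts = []
        elif tag == "img" and self._href is not None:
            alt = (attrs.get("alt") or "").strip()
            if alt:
                self._parts.append(alt)

    def handle_data(self, data):
        if self._href is not None and data.strip():
            self._parts.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, " ".join(self._parts)))
            self._href = None


def audit_home_links(html, max_label_len=MAX_LABEL_LEN):
    """Return (href, label, note) for each link found in the home page HTML."""
    collector = HomeLinkCollector()
    collector.feed(html)
    collector.close()
    report = []
    for href, label in collector.links:
        if href.lower().startswith("javascript:"):
            note = "script link, may not be crawlable"
        elif not label:
            note = "no anchor text or ALT text"
        elif len(label) > max_label_len:
            note = "anchor text may be too long for a sitelink"
        else:
            note = "ok"
        report.append((href, label, note))
    return report
```

For example, `audit_home_links('<a href="/clients/">Clients</a>')` returns `[('/clients/', 'Clients', 'ok')]`. An image link such as `<a href="/menu/"><img src="m.png" alt="Menu"></a>` is reported with the label `Menu`, while the same link without an ALT attribute is flagged as having no label.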

Q: What’s the point of having these stupid Sitelinks ?

A: One simple and huge reason: trust and brand. Lately, Sitelinks have come to signal trust in the eyes of the average searcher (not to us SEMs, because we know there are heavily penalized websites that still have Sitelinks), so any website that has them is more likely to get clicks from the SERPs for the search terms that show Sitelinks.

Q: What’s the minimum and maximum number of Sitelinks I’ll get ?

A: Minimum 2, maximum 8. However, I still can’t figure out how Google decides the number of Sitelinks for each website, other than by popularity. Most of my popular websites have 8; most of my less popular websites have 2 to 4.

Q: I don’t have a Google Sitemaps account. Will I still get Sitelinks ?

A: Definitely. The only drawback is that you will not have any control over them.

Q: How are the Sitelinks calculated ? Which links get in and which not ?

A: There are all kinds of opinions. After closely studying all my websites, I still believe that they are chosen randomly, not based on traffic or inbound links. There’s an interesting thread at SEW which you might want to read for some speculation.

Q: I have a page in the Sitelinks section that doesn’t exist anymore. What should I do ?

A: It appears that Sitelinks are refreshed with a delay of at least one month. So if a Sitelink points to a page that no longer exists, set up a 301 redirect from it to the new page. The Sitelink will then work correctly.
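A 301 is an HTTP "Moved Permanently" response whose `Location` header points at the new URL. Most sites would configure this in their web server (Apache, nginx and so on) rather than in application code, but the mechanism is easy to see in a minimal Python sketch built on the standard library's `http.server`. The `REDIRECTS` mapping and its paths are hypothetical placeholders for your own retired and replacement pages, and the demo handler only serves redirects.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical mapping: retired URLs (e.g. ones still shown as Sitelinks) -> replacement pages.
REDIRECTS = {
    "/old-clients.html": "/clients/",
    "/contact.php": "/contact/",
}


def redirect_target(path, redirects=REDIRECTS):
    """Return the replacement URL for a retired path (query string ignored), or None."""
    return redirects.get(urlsplit(path).path)


class PermanentRedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        target = redirect_target(self.path)
        if target is None:
            self.send_error(404, "Not Found")
            return
        # 301 marks the move as permanent, so search engines can swap the old URL for the new one.
        self.send_response(301)
        self.send_header("Location", target)
        self.send_header("Content-Length", "0")
        self.end_headers()

    do_HEAD = do_GET  # answer HEAD requests with the same status and headers


def serve(port=8000):
    """Run a local demo server; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PermanentRedirectHandler).serve_forever()
```

Calling `serve()` starts the demo on port 8000; a request for `/old-clients.html` then gets a 301 pointing at `/clients/`, and any unmapped path gets a 404.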

Q: Can I remove Sitelinks I don’t like from my Sitemaps account?

A: Indeed you can. But be careful when you do, because a removed Sitelink does not get replaced by another. If you had 6 Sitelinks and block one because it’s not appropriate, you will be left with 5 Sitelinks in the Google SERPs; the 6th will not be replaced with a new Sitelink.

Vanessa Fox Nude: the forgotten, all-important post

The title is just a teaser for Vanessa. She’s had that Nude thing like forever :)

For those of you who don’t know Vanessa, she’s the woman who led the Google Webmaster Central team until she moved to Zillow.

In this section I’ll analyze the post she made on her blog right after she left Google. I’m actually amazed that I can’t find any reactions to this post, since IMHO it’s the most important post about Sitelinks ever: more important than what Google has released, and certainly more important than what my colleagues or I speculate, simply because she was involved in releasing Sitelinks. The block quotes below are from Vanessa’s post:

> For instance, if I do a Google search for [duke’s chowder house seattle], am I looking for directions? Hours? A menu? Google doesn’t know, so they offer up several suggestions. (Quality aside: a link to the menu shows up in the sitelinks, but if you do a search for [duke’s chowder house seattle menu], that same link doesn’t show up on the first page. In fact, no pages from the Duke’s site show up.)

Basically, what Vanessa is telling us is that Sitelinks will NEVER appear for specific search terms. That’s why we get Sitelinks for “Computers”, “Cristian Mezei” or “HP”, and generally for company names and very general industry terms.

> Google autogenerates the list of sitelinks at least in part from internal links from the home page. You’ll notice in the Duke’s example that one of the sitelinks is “five great locations” which also appears as primary navigation on the Duke’s home page. If you want to influence the sitelinks that appear for your site, make sure that your home page includes the links you want and that those links are easy to crawl (in HTML rather than Flash or Javascript, for instance) and have short anchor text that’ll fit in a sitelinks listing. They’ll also have to be relevant links. You can’t just put your Buy Cheap Viagra now link on the home page of your elementary school site and hope for the best.

In the above, Vanessa confirms what I already told you in the FAQ section: Sitelinks are chosen only from links present on the homepage. Still, I firmly believe that some websites have Sitelinks from deep links within the website. How and when these websites are chosen is still a mystery.

One more important thing we learn is that Sitelinks are chosen from relevant links on the homepage. Rather than repeat what Vanessa said about relevance, I'll point you to the quote above.

There is a lot of other useful information inside Vanessa’s post, but since I already tackled those points in my previous sections, I left them aside.

Other opinions about Sitelinks

I asked a colleague of mine who is also involved in SEM what he thinks about Sitelinks, and thought I'd include his answer here as well:

Marius Mailat

www.submitsuite.com

Cristian asked me for my opinion on Sitelinks. Breaking the question into small parts, here are my thoughts.

The Sitelinks shown in the Google results are similar to the siteinfo.xml file provided for the Alexa toolbar: a simple way for a webmaster to provide the most important direct links into the website's structure. Google’s version differs because you cannot specify WHICH links on your website become Sitelinks. You can only ask for a link to be removed from the Sitelinks (an option in the Google Webmaster panel).

Why do Sitelinks appear, when, and under which algorithm? The algorithm is fully automated and takes the following criteria into consideration:

- An old, powerful website.

- Sitelinks appear for pages that come up in first position in the SERPs.

- Sitelinks are usually associated with words related to the top result: “domain”, “domain download”, “domain demo” etc.

- Sitelinks are probably not influenced by PageRank.

Other very useful locations on the web for Sitelinks

Have fun with Sitelinks. If you have any questions, suggestions or rectifications, write a comment.

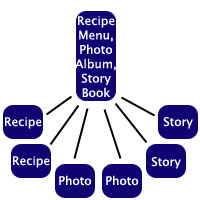

This system combines the second and third tiers, with a link to each category on the main page, and multiple items (e.g. stories) on one page. This is ideal if you have lots of categories but not much content in each. If I tried to set Pegaweb up like this, every tutorial would be on a single "Tutorial Page". It would be about 800k, and about ten miles high. :)

Top-Heavy System:

As long as this doesn't create mass clutter on your main page, this option has all the advantages of both two- and three-tier systems.

The links to each item are on the main page, but are organised into tiers on that page. There will be a fair few links on the main page, but the organisation of the site will be very easy to understand.

This is the system I intend to use when I redesign this site. On the main page, there will be tiers, with links to each individual tutorial, article or resource etc. If you look back up the page, you'll find that this system is very similar to the two-tier system - in effect, it IS a two-tier system.

The moral of the story is - design your websites to be two-tiered. Choose the bottom-heavy or top-heavy systems as necessary. Unless your site is gigantic, it's possible to keep your site two-tiered, especially by using the bottom-heavy system and creating/removing categories, to balance the clutter between your main page and the rest of the site.
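One way to check whether a site really is two-tiered is to count clicks from the main page with a breadth-first search over its internal links. The Python sketch below assumes you have already collected each page's outgoing internal links into a dictionary (`EXAMPLE_SITE` is hypothetical), and it counts tiers the way this article appears to: the main page is tier one, and every page it links to directly is tier two. Pages that never appear in the result are not reachable from the main page at all.

```python
from collections import deque

# Hypothetical site: each page maps to the internal pages it links to.
EXAMPLE_SITE = {
    "/": ["/tutorials/", "/articles/", "/about/"],
    "/tutorials/": ["/tutorials/css/", "/tutorials/html/"],
    "/tutorials/css/": ["/tutorials/css/selectors/"],
}


def click_depths(links, home="/"):
    """Breadth-first search from the main page: {page: clicks needed to reach it}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def tiers(links, home="/"):
    """Tier numbers as assumed here: main page = 1, pages it links to = 2, and so on."""
    return {page: depth + 1 for page, depth in click_depths(links, home).items()}


def pages_beyond_tier(links, max_tier=2, home="/"):
    """Sorted list of reachable pages that sit deeper than max_tier."""
    return sorted(page for page, tier in tiers(links, home).items() if tier > max_tier)
```

Here `pages_beyond_tier(EXAMPLE_SITE)` returns `['/tutorials/css/', '/tutorials/css/selectors/', '/tutorials/html/']`: the category page in between pushes every tutorial into the third tier or deeper, which is the situation the top-heavy system avoids by linking each item from the main page.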

This is a reposting of a 2006 article by

Every color you can think of can be used on the web these days, which makes picking the right colors a mammoth task. Here is a quick summary of the reactions some colors can provoke.

Green is linked with nature, peace and jealousy. It is also a truly relaxing color, ideal when you want a calming effect. White stirs up feelings of purity, simplicity, emptiness and innocence. Used as the main color of a site, it creates a clean and simple feel.

Blue is the color most commonly associated with business sites, as it's a strong color linked with confidence, loyalty, coolness, water and peace, but also with coldness and depression. It's frequently named the world's favorite color, and many companies use it to create a feeling of strength and confidence (plus, blue and orange seem to be the favored colors in Naperville and Chicago).

Black is linked to feelings of mystery and refinement. An extremely popular color on design and photography websites, it can be used effectively to contrast with and liven up other colors. Green is also linked to organic products, nature and relaxation; the paler end of the green spectrum can give a site a relaxed feel.

Grey can be associated with respect, humility, decay and boredom. It's often used to form shiny gradients in website design, giving a site a professional, understated feel. Orange is strongly associated with spirituality and healing: it's the color that symbolizes Buddhism, and it has a calming energy about it. It's a bold color, not as lively as yellow but not as deep as red.

Darker shades of purple can be very deep and luscious. Purple is linked to royalty, spirituality, arrogance and luxury, while lighter shades can represent romance and delicacy. It's not used much on websites. Orange, full of energy, vibrancy and stimulation, is a fantastic color for web design and can bring youthfulness to a design.

Color's role is not just to make a website look good; it can evoke feelings and emotions in the audience. In the Chicago and Naperville areas, this can be especially important because of how emotionally driven local customers can be. Choosing colors that annoy users can damage your website, while choosing them cleverly helps the website meet user expectations.

So now that you know this “method” of SEO is archaic, ineffective and sloppy, how do you go about fixing your site?

Whether or not you should “fix something that isn’t broken” is a question only you (if you have the knowledge), your in-house SEO or an outside SEO firm can really answer, because it needs to be looked at on a case-by-case basis. There IS POTENTIAL RISK INVOLVED in changing URL structure, and it should be assessed. That said, I’ve had a lot of success with site structure migrations, and most of the time I choose to migrate the site slowly to a new, sensible URL structure.

Some tips for site structure migration

If you do choose to change your URL structure, you’ll find some tips based on my experience with my previous migrations below:

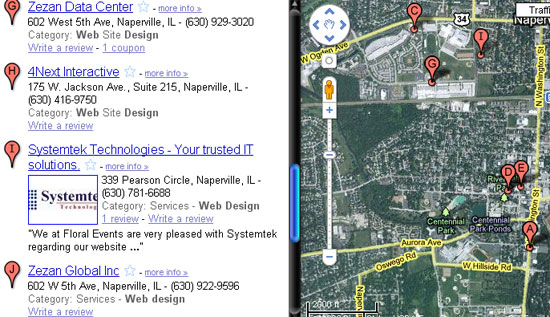

Please feel free to verify the results. We were listed #1 this morning. As you can see, there is no login to Google, so nothing based on personal preferences could be affecting the rankings.

This is further proof that high-cost local SEO groups are charging more for their services than the average business should be paying. This is in no small part a benefit of the mapping techniques discussed

So figuring out exactly how to get a 100% guarantee that your site will end up on top of the search results is basically the Holy Grail of SEO. There are people out there who dedicate their careers, even their lives, to figuring this out, because they know that if they did, they would become overnight millionaires. It would be like cracking a code nobody else in the world could crack. Some people may have figured out parts of it, but no one has been able to give that 100% guarantee.

The problem is a lack of consistency. If you asked anyone who has a website how they were going to get to the top of the Google search results, 99.9% would say they need the highest PageRank possible. There's no doubt that a high PageRank is important, but is it a guarantee that your site will make it onto the first page of the results? A lot of people would say yes, and when Google announces a PageRank update, most website owners are on pins and needles wondering whether they will move up or down. But what if it didn't really matter?

There is an internet marketer (probably one of the last truly honest ones out there) named Jonathan Leger who has spent a great deal of time testing the theories that SEO experts or "gurus" have been selling to website owners for years. One of them is the "PageRank theory". He ran a very intensive case study and came up with some interesting results: up to a third of the time, websites with lower PageRank actually ranked higher in the search results than those with higher PageRank, sometimes a great deal higher. Consider a website with a PageRank of 4 ending up 5 places above a website with a PageRank of 7 or 8. It actually happens!

But this isn't the only theory preached by SEO experts that doesn't stand up under Mr. Leger's study. He has found 7 (yes, 7!) different SEO theories that don't always hold true in the real world. That's why, if you are at all interested in getting your website to the top of the search results, you have to read Jon's report. And he surprises us all again by not charging a single dime for it! All you have to do is go to his website below and download the free report. This whole thing could end up making some people very angry, but I have a feeling the rest of us will be very happy!

While some may say this method is dirty, tainted, or just underhanded marketing, it's being used by businesses everywhere to boost their site recognition and visibility. The point is not to dismiss any form of media as too small to be useful. Whatever addresses apply to your business or site should always be listed in the Google, Yahoo and MapQuest map sites. Unless there are privacy concerns, this is an easy way of getting multiple links and search traffic to your site. As for privacy concerns, it is quite apparent that a lot of businesses are using addresses that are not their own, not remotely related to the company, and in a few cases don't even exist. Like I said, I don't take a stance either way on the address issue. Whatever address you choose to use is your own business and will depend on your site's needs. The point is to consider adding site traffic through any means possible and to raise your position in the search engines. If you find that having multiple addresses in the map listings gives you extra exposure, it might be worth considering.

You'll see lots of advice about "SEO" (Search Engine Optimization) or "using keywords." Much of that advice can actually hurt your Google rankings, especially "keyword stuffing," or deliberately overusing the terms you think will bring your page to the top of a search. Google and other search engines constantly change the way they calculate search rankings, and they're getting smarter at rewarding quality sites. That means the best way to make sure your pages and posts rank highly and appear in the first few results when someone searches is to write well.

Really. Good writing trumps all the deliberate use of SEO keyword techniques.

But what, you ask, is good writing? I'm glad you asked, Grasshopper.

To begin with, good writing means being as clear as you can about exactly what you're writing about.

Use a very clear and specific title for your page or post. Remember that a lot of readers will only see your title in their RSS feed, and the title has to be clear enough that they click to read the post. You want to be accurate, descriptive—and brief.

Don't use the same subject phrase over and over—that's called keyword stuffing, and it's not only repetitive and boring, it's down right obnoxious. Overusing a keyword or phrase can suggest to a search engine that you're a spam site, and your ranking will suffer accordingly.

Do use the most appropriate, descriptive, and specific language. Being specific means your reader doesn't have to guess what you mean or what you're talking about. Specificity also makes your work easy to find.

Remember that just because you call cats "kitties" doesn't mean your readers will, so think about synonyms and likely search phrases. What will help a reader find your post? You might choose to use cats, kitties, and felines, and you might also deliberately choose to avoid using pussies, since you don't want to attract porn spam.

Google offers some tools to help you write good posts. One of them is the

Visualize The Approach

Think of throwing rocks into a small pond or lake. The ripples swell outward in various patterns and expanses, growing smaller as they travel. As they encounter obstacles and other ripples, their direction shifts in ever more unpredictable ways. This is the key visualization for increasing site traffic, in reverse, of course.


Keep in mind, all waves have roughly the same amplitude. With this thought, imagine pushing traffic in small, obscure waves that build in size as they travel back to your website. This requires a lot of work and interaction with other sites. Use the blogosphere, press releases, Twitter, Facebook, Myspace, Ning, Digg, Del.icio.us, LinkedIn, etc. The list could go on for some time. These are at the top of nearly every "at-home SEO expert's" list, but what about the lesser-known sites? What about blogs that are only minimally related to your goal? They ARE all fair game, regardless of what some experts may say. The point is not to think only of social networking, not only of bookmarks, and not only of the quick-response websites