Posted by bhendrickson

We recently posted some correlation statistics on our blog. We believe these statistics are interesting and provide insight into the ways search engines work (a core principle of our mission here at SEOmoz). As we will continue to make similar statistics available, I'd like to discuss why correlations are interesting, refute the math behind recent criticisms, and reflect on how exciting it is to engage in mathematical discussions where critiques can be definitively rebutted.

I've been around SEOmoz for a little while now, but I don't post a lot. So, as a quick reminder, I designed and built the prototype for SEOmoz's web index and wrote a large portion of its back-end code. We shipped the index with billions of pages nine months after I started on the prototype, and we have continued to improve it since. Recently I built the machine learning models behind Page Authority and Domain Authority, and I am working on some fairly exciting things that have not yet shipped. As I'm an engineer and not a regular blogger, I'll ask for a bit of empathy for my post: it's a bit technical, but I've tried to make it as accessible as possible.

Why does Correlation Matter?

Correlation helps us find causation by measuring how much variables change together. Correlation does not imply causation; variables can be changing together for reasons other than one affecting the other. However, if two variables are correlated and neither is affecting the other, we can conclude that there must be a third variable that is affecting both. This variable is known as a confounding variable. When we see correlations, we do learn that a cause exists -- it might just be a confounding variable that we have yet to figure out.
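To make the confounding-variable idea concrete, here is a minimal simulation sketch. It is a hypothetical illustration, not data from our study: a hidden variable Z drives both X and Y, X and Y never affect each other, and yet they come out clearly correlated. The variable names, the Gaussian noise model and the seed are all assumptions chosen for the example.

```python
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def simulate_confounder(n=10_000, seed=42):
    """Z drives both X and Y; X and Y never influence each other."""
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]  # hidden confounding variable
    x = [zi + rng.gauss(0, 1) for zi in z]   # X depends only on Z plus noise
    y = [zi + rng.gauss(0, 1) for zi in z]   # Y depends only on Z plus noise
    return pearson(x, y)


# Roughly 0.5 in expectation, even though X has no effect on Y.
print(f"corr(X, Y) = {simulate_confounder():.2f}")
```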

How can we make use of correlation data? Let's consider a non-SEO example.

There is evidence that women who occasionally drink alcohol during pregnancy give birth to smarter children with better social skills than women who abstain. The correlation is clear, but the causation is not. If the relationship is causal, then light drinking makes the child smarter. If a confounding variable is responsible, light drinking could have no effect or even make the child slightly less intelligent (as extrapolating from the finding that heavy drinking during pregnancy makes children considerably less intelligent would suggest).

Although these correlations are interesting, they are not black-and-white proof that behaviors need to change. One needs to consider which explanations are more plausible: the causal ones or the confounding variable ones. To keep the analogy simple, let's suppose there were only two likely explanations: one causal and one confounding. The causal explanation is that alcohol makes a mother less stressed, which helps the unborn baby. The confounding variable explanation is that women with more relaxed personalities are more likely to drink during pregnancy and less likely to negatively impact their child's intelligence with stress. Given this, I probably would be more likely to drink during pregnancy because of the correlation evidence, but there is an even bigger take-away: both likely explanations damn stress. So, because of the correlation evidence about drinking, I would work hard to avoid stressful circumstances. *

Was the analogy clear? I am suggesting that as SEOs we approach correlation statistics like pregnant women considering drinking - cautiously, but without too much stress.

* Even though I am a talented programmer and work in the SEO industry, do not take medical advice from me, and note that I chose these likely explanations for the sake of simplicity :-)

Some notes on data and methodology

We have two goals when selecting a methodology to analyze SERPs:

- Choose measurements that will communicate the most meaningful data

- Use techniques that can be easily understood and reproduced by others

These goals sometimes conflict, but we generally choose the most common method still consistent with our problem. Here is a quick rundown of the major options we had, and how we decided between them for our most recent results:

Machine Learning Models vs. Correlation Data: Machine learning can model and account for complex variable interactions. In the past, we have reported derivatives of our machine learning models. However, these results are difficult to create, they are difficult to understand, and they are difficult to verify. Instead we decided to compute simple correlation statistics.

Pearson's Correlation vs. Spearman's Correlation: The most common measure of correlation is Pearson's correlation, although it only measures linear correlation. This limitation is important: we have no reason to think that interesting correlations with ranking will all be linear. Instead, we chose to use Spearman's correlation. Spearman's correlation is still quite common, and it does a reasonable job of measuring any monotonic correlation.

Here is a monotonic example: The count of how many of my coworkers have eaten lunch for the day is perfectly monotonically correlated with the time of day. It is not a straight line and so it isn't linear correlation, but it is never decreasing, so it is monotonic correlation.

Here is a linear example: assuming I read at a constant rate, the number of pages I read is linearly correlated with the length of time I spend reading.
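The difference between the two measures can be seen in a few lines of plain Python. This is an illustrative sketch, not the code behind our study: Spearman's correlation is computed as Pearson's correlation applied to ranks (ties get the average of their positions), and the exponential series is an arbitrary example of a relationship that is monotonic but not linear.

```python
import math
import statistics


def pearson(xs, ys):
    """Pearson correlation: measures how well a straight line fits the data."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson applied to the ranks of the data."""
    return pearson(ranks(xs), ranks(ys))


x = list(range(1, 11))
y = [math.exp(v) for v in x]   # never decreasing, but far from a straight line
print(pearson(x, y))           # well below 1
print(spearman(x, y))          # 1.0: a perfect monotonic relationship
```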

Mean Correlation Coefficient vs. Pooled Correlation Coefficient: We collected data for 11,000+ queries. For each query, we can measure the correlation of ranking position with a particular metric by computing a correlation coefficient. However, we don't want to report 11,000+ correlation coefficients; we want to report a single number that reflects how correlated the data was across our dataset, and we want to show how statistically significant that number is. There are two techniques commonly used to do this:

- Compute the mean of the correlation coefficients. To show statistical significance, we can report the standard error of the mean.

- Pool the results from all SERPs and compute a global correlation coefficient. To show statistical significance, we can compute standard error through a technique known as bootstrapping.

The mean correlation coefficient and the pooled correlation coefficient would both be meaningful statistics to report. However, the bootstrapping needed to show the standard error of the pooled correlation coefficient is less common than using the standard error of the mean. So we went with the first option: the mean of the per-query coefficients, reported with its standard error.
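The two options can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not SEOmoz's production code: `serps` is a hypothetical list of `(positions, metric)` pairs, the data are randomly generated, and the bootstrap resamples whole SERPs, which is one reasonable choice since results within a SERP are not independent of each other.

```python
import math
import random
import statistics


def pearson(xs, ys):
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result


def spearman(xs, ys):
    return pearson(ranks(xs), ranks(ys))


def mean_coefficient(serps):
    """Option 1: one coefficient per SERP, then their mean and standard error of the mean."""
    coefs = [spearman(positions, metric) for positions, metric in serps]
    return statistics.fmean(coefs), statistics.stdev(coefs) / math.sqrt(len(coefs))


def pooled_coefficient(serps, n_boot=200, seed=0):
    """Option 2: pool all (position, metric) pairs; bootstrap whole SERPs for the standard error."""
    def pooled(sample):
        positions = [p for pos, _ in sample for p in pos]
        metric = [m for _, met in sample for m in met]
        return spearman(positions, metric)

    rng = random.Random(seed)
    boots = [pooled([rng.choice(serps) for _ in serps]) for _ in range(n_boot)]
    return pooled(serps), statistics.stdev(boots)


# Hypothetical data: 300 SERPs of 10 results whose metric tends to fall as position worsens.
rng = random.Random(1)
serps = []
for _ in range(300):
    positions = list(range(1, 11))
    serps.append((positions, [-p + rng.gauss(0, 4) for p in positions]))

print("mean coefficient, SEM:", mean_coefficient(serps))
print("pooled coefficient, bootstrap SE:", pooled_coefficient(serps))
```

The first option needs nothing beyond the textbook standard error of the mean, which is why it is easier for others to reproduce.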

Fisher Transform vs. No Fisher Transform: When averaging a set of correlation coefficients, one sometimes computes the mean of the Fisher transforms of the coefficients, rather than the mean of the coefficients themselves, and then applies the inverse Fisher transform to that mean. This would not be appropriate for our problem because:

- It will likely fail. The Fisher transform, z = 0.5 * ln((1 + r) / (1 - r)), divides by one minus the coefficient, so it explodes when an individual coefficient is near 1 (or -1) and fails outright when a coefficient is exactly 1 (or -1). Because we are computing hundreds of thousands of coefficients, each over a small sample, it is quite likely the Fisher transform would fail for our problem. (Of course, we have a large number of these coefficients to average over, so our final standard error is not large.)

- It is unnecessary, for two reasons. First, the advantage of the transform is that it can make the expected average closer to the expected coefficient, and nothing we do assumes this property. Second, the transform is nearly the identity for coefficients near zero, so this property roughly holds without it, and our coefficients were not large.
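A minimal sketch of Fisher-transformed averaging, using only Python's `math` module (`math.atanh` is the Fisher transform and `math.tanh` is its inverse). The coefficient lists are made up for illustration. The sketch shows both points above: for small coefficients the result barely differs from a plain mean, and a single coefficient of exactly 1, which a ten-result SERP can produce, makes the transform fail.

```python
import math


def fisher_mean(coefs):
    """Average correlations via Fisher's z: transform, take the mean, transform back."""
    zs = [math.atanh(r) for r in coefs]  # atanh(r) == 0.5 * ln((1 + r) / (1 - r))
    return math.tanh(sum(zs) / len(zs))


small = [0.05, 0.12, -0.03, 0.20]   # made-up, near-zero coefficients
plain = sum(small) / len(small)
print(plain, fisher_mean(small))    # the two differ by less than 0.001

try:
    fisher_mean([0.3, 1.0])         # one perfectly correlated SERP
except ValueError as err:
    print("Fisher transform failed:", err)  # math.atanh(1.0) raises ValueError
```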

Rebuttals To Recent Criticisms

Two bloggers, Dr. E. Garcia and Ted Dzubia, have published criticisms of our statistics.

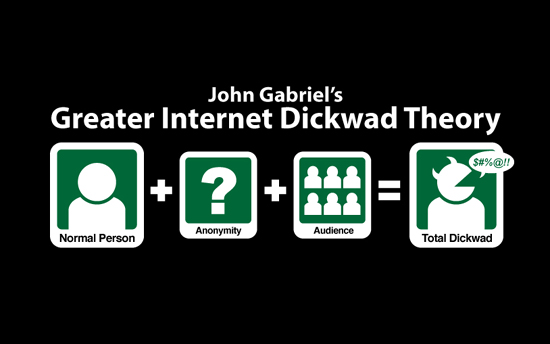

Eight months before his current post, Ted Dzubia wrote an enjoyable and jaunty post lamenting that criticism of SEO every six to eight months was an easy way to generate controversy, noting "it's been a solid eight months, and somebody kicked the hornet's nest. Is SEO good or evil? It's good. It's great. I <3 SEO." Furthermore, his Twitter feed makes it clear he sometimes trolls for fun. To wit: "Mongrel 2 under the Affero GPL. TROLLED HARD," "Hacker News troll successful," and "mailing lists for different NoSQL servers are ripe for severe trolling." So it is likely we've fallen for trolling...

I am going to respond to both of their posts anyway because they have received a fair amount of attention, and because both posts seek to undermine the credibility of the wider SEO industry. SEOmoz works hard to raise the standards of the SEO industry, and protect it from unfair criticisms (like Garcia's claim that "those conferences are full of speakers promoting a lot of non-sense and SEO myths/hearsays/own crappy ideas" or Dzubia's claim that, besides our statistics, "everything else in the field is either anecdotal hocus-pocus or a decree from Matt Cutts"). We also plan to create more correlation studies (and more sophisticated analyses using my aforementioned ranking models) and thus want to ensure that those who are employing this research data can feel confident in the methodology employed.

Search engine marketing conferences, like SMX, OMS and SES, are essential to the vitality of our industry. They are an opportunity for new SEO consultants to learn, and for experienced SEOs to compare notes. It can be hard to argue against such subjective and unfair criticism of our industry, but we can definitively rebut their math.

To that end, here are rebuttals for the four major mathematical criticisms made by Dr. E. Garcia, and the two made by Dzubia.

1) Rebuttal to Claim That Mean Correlation Coefficients Are Uncomputable

For our charts, we compute a mean correlation coefficient. The claim is that such a value is impossible to compute.

Dr. E. Garcia : "Evidently Ben and Rand don’t understand statistics at all. Correlation coefficients are not additive. So you cannot compute a mean correlation coefficient, nor you can use such 'average' to compute a standard deviation of correlation coefficients."

There are two issues with this claim: a) peer-reviewed papers frequently publish mean correlation coefficients; b) additivity is relevant for determining whether two different meanings of the word "average" will have the same value, not whether the mean is computable. Let's consider each issue in more detail.

a) Peer Reviewed Articles Frequently Compute A Mean Correlation Coefficient

E. Garcia is claiming something is uncomputable that researchers frequently compute and include in peer-reviewed articles. Here are three significant papers where the researchers compute a mean correlation coefficient:

"The weighted mean correlation coefficient between fitness and genetic diversity for the 34 data sets was moderate, with a mean of 0.432 +/- 0.0577" (Macquarie University - "Correlation between Fitness and Genetic Diversity", Reed, Frankham; Conservation Biology; 2003)

"We observed a progressive change of the mean correlation coefficient over a period of several months as a consequence of the exposure to a viscous force field during each session. The mean correlation coefficient computed during the force-field epochs progressively..." (MIT - F. Gandolfo, et al; "Cortical correlates of learning in monkeys adapting to a new dynamical environment," 2000)

"For the 100 pairs of MT neurons, the mean correlation coefficient was 0.12, a value significantly greater than zero" (Stanford - E Zohary, et al; "Correlated neuronal discharge rate and its implications for psychophysical performance", 1994)

SEOmoz is in a camp with reviewers from the journal Nature, as well as researchers from MIT, Stanford and authors of 2,400 other academic papers that use the mean correlation coefficient. Our camp is being attacked by Dr. E. Garcia, who argues our camp doesn't "understand statistics at all." It is fine to take positions outside of the scientific mainstream, but when Dr. E. Garcia takes such a position he should offer more support for it. Given how commonly Dr. E. Garcia uses the pejorative "quack," I suspect he does not mean to take positions this far outside of academic consensus.

b) Additivity Relevant For Determining If Different Meanings Of "Average" Are The Same, Not If Mean Is Computable

Although "mean" is quite precise, "average" is less so. By "average" one might mean the "mean", the "mode", the "median," or something else. Another possible meaning is 'the value of a function on the union of the inputs'. This last definition might seem odd, but it is sometimes used. Consider the question: "A car travels 1 mile at 20mph and 1 mile at 40mph; what was the average mph for the entire trip?" The answer being sought is not 30mph, which is the mean of the two measurements, but ~26.7mph, which is the mph for the whole 2 mile trip (2 miles in 4.5 minutes). In this case, the mean of the measurements differs from the colloquial average, which is the function for computing mph applied to the union of the inputs (the whole two miles).
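The two notions of "average" in the car example can be written out in a few lines of Python (the function names are ours, purely for illustration). The mean of the measurements treats each speed as one number; the trip speed applies the mph formula, total distance divided by total time, to the union of both legs, which for equal distances works out to the harmonic mean of the speeds.

```python
def mean_of_speeds(speeds):
    """Plain mean of the individual speed measurements."""
    return sum(speeds) / len(speeds)


def trip_speed(legs):
    """Speed for the whole trip: total miles over total hours; legs are (miles, mph) pairs."""
    total_miles = sum(miles for miles, _ in legs)
    total_hours = sum(miles / mph for miles, mph in legs)
    return total_miles / total_hours


legs = [(1, 20), (1, 40)]
print(mean_of_speeds([mph for _, mph in legs]))  # 30.0
print(round(trip_speed(legs), 2))                # 26.67
```

Both numbers are perfectly computable; they simply answer different questions.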

This may be what has confused Dr. E. Garcia. Elsewhere, when repeating this claim, he cites Statsweb, which makes the point that this other "average" is different from the mean. Additivity is useful in determining whether these averages will differ. But even if another interpretation of average is valid for a problem, and even if that other average differs from the mean, that makes the mean neither uncomputable nor meaningless.

2) Rebuttal to Claim About Standard Error of the Mean vs Standard Error of a Correlation Coefficient

Although he has stated unequivocally that one cannot compute a mean correlation coefficient, Garcia is quite opinionated on how we ought to have computed standard error for it. To wit:

E. Garcia: "Evidently, you don’t know how to calculate the standard error of a correlation coefficient... the standard error of the mean and the standard error of a correlation coefficient are two different things. Moreover, the standard deviation of the mean is not used to calculate the standard error of a correlation coefficient or to compare correlation coefficients or their statistical significance."

He repeats this claim even after making the point above about mean correlation coefficients, so he clearly is aware the correlation coefficients being discussed are mean coefficients and not coefficients computed after pooling data points. So let's be clear on exactly what his claim implies. We have some measured correlation coefficients, and we take the mean of these measured coefficients. The claim is that we should have used the same formula for standard error of the mean of these measured coefficients that we would have used for only one. Garcia's claim is incorrect. One would use the formula for the standard error of the mean.

The formula for the mean, and for the standard error of the mean, apply even if there is a way to separately compute standard error for one of the observations the mean was over. If we were computing the mean of the count of apples in barrels, lifespans of people in the 19th century, or correlation coefficients for different SERPs, the same formula for the standard error of this mean applies. Even if we have other ways to measure the standard error of the measurements we are taking the mean over - for instance, our measure of lifespans might only be accurate to the day of death and so could be off by 24 hours - we cannot use how we would compute standard error for an observation to compute standard error of the mean of those observations.
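To make the distinction concrete, here are the two formulas side by side, in their standard textbook forms (the symbols k, n and s_r are our own notation). The first treats each per-SERP coefficient as one observation, exactly as one would treat apple counts or lifespans; the second describes the uncertainty of a single coefficient from its own n data points and tells us nothing about the spread of many coefficients.

```latex
% k per-SERP coefficients r_1, ..., r_k: their mean and the standard error of that mean
\bar{r} = \frac{1}{k}\sum_{i=1}^{k} r_i,
\qquad
s_r = \sqrt{\frac{1}{k-1}\sum_{i=1}^{k}\left(r_i - \bar{r}\right)^2},
\qquad
\mathrm{SE}(\bar{r}) = \frac{s_r}{\sqrt{k}}

% A single Pearson coefficient r computed from n pairs: a common approximation
\mathrm{SE}(r) \approx \sqrt{\frac{1 - r^2}{n - 2}}
```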

A smaller but related objection is over language. He objects to my use of "standard deviations" to describe how far a point is from a mean, measured in units of the mean's standard error. As Wikipedia notes, the "standard error of the mean (i.e., of using the sample mean as a method of estimating the population mean) is the standard deviation of those sample means." So, according to Wikipedia, counting how many standard errors a number is from the estimate of a mean is counting standard deviations of our mean estimate. Beyond being technically correct, the usage also fit the context, which was the accuracy of the sample mean.
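The Wikipedia definition quoted above can be checked with a quick simulation sketch. This is our own illustration, with an arbitrary normal population, sample size and seed: draw many samples, take each sample's mean, and the standard deviation of those means lands close to the standard error formula sigma / sqrt(n).

```python
import random
import statistics


def spread_of_sample_means(population_sd=1.0, n=25, trials=4000, seed=7):
    """Standard deviation of `trials` sample means, each over n draws from N(0, population_sd)."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.gauss(0, population_sd) for _ in range(n))
        for _ in range(trials)
    ]
    return statistics.stdev(means)


# The standard error of the mean predicts 1.0 / sqrt(25) = 0.2.
print(spread_of_sample_means())
```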

3) Rebuttal to Claim That Non-Linearity Is Not A Valid Reason To Use Spearman's Correlation

I wrote "Pearson’s correlation is only good at measuring linear correlation, and many of the values we are looking at are not. If something is well exponentially correlated (like link counts generally are), we don’t want to score them unfairly lower.”

E. Garcia responded by quoting a source he described as "exactly right": "Rand your (or Ben’s) reasoning for using Spearman correlation instead of Pearson is wrong. The difference between two correlations is not that one describes linear and the other exponential correlation, it is that they differ in the type of variables that they use. Both Spearman and Pearson are trying to find whether two variables correlate through a monotone function, the difference is that they treat different type of variables - Pearson deals with non-ranked or continuous variables while Spearman deals with ranked data."

E. Garcia's source, and by extension E. Garcia, are incorrect. A desire to measure non-linear correlation, such as exponential correlation, is a valid reason to use Spearman's over Pearson's. The point that "Pearson deals with non-ranked or continuous variables while Spearman deals with ranked data" is true in the sense that, to compute Spearman's correlation, one can convert continuous variables to ranked indices and then apply Pearson's. However, the variables do not need to be ranked indices to begin with. If they did, Spearman's would always produce the same results as Pearson's and there would be no purpose for it.
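A short sketch of that relationship in plain Python (the data and the no-ties simplification are our own assumptions, for brevity): Spearman's correlation is computed here by converting each variable to rank indices and then applying Pearson's formula. On continuous, non-linearly related data the two measures differ; on data that are already rank indices, converting to ranks changes nothing, so the two agree exactly.

```python
import math


def pearson(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / math.sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))


def to_ranks(values):
    """Replace each value by its 1-based rank (assumes no ties, for brevity)."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        ranks[idx] = rank
    return ranks


def spearman(xs, ys):
    # Spearman's rho is Pearson's r applied to the rank indices of the data.
    return pearson(to_ranks(xs), to_ranks(ys))


# Continuous data (hypothetical link counts vs. a score): the two measures differ.
links = [3, 10, 40, 150, 900, 5000]
score = [1.0, 2.1, 2.9, 4.2, 5.1, 5.8]
print(pearson(links, score), spearman(links, score))

# Data that are already rank indices: ranking changes nothing, so they agree.
a = [1, 2, 3, 4, 5, 6]
b = [2, 1, 4, 3, 6, 5]
print(pearson(a, b), spearman(a, b))
```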

My point that E. Garcia objects to, that Pearson's only measures linear correlation while Spearman's can measure other kinds of correlation such as exponential correlation, was entirely correct. We can quickly quote Wikipedia to show that Spearman's measures any monotonic correlation (including exponential) while Pearson's only measures linear correlation.

The Wikipedia article on Pearson's Correlation starts by noting that it is a "measure of the correlation (linear dependence) between two variables".

The Wikipedia article on Spearman's Correlation starts with an example in the upper right showing that a "Spearman correlation of 1 results when the two variables being compared are monotonically related, even if their relationship is not linear. In contrast, this does not give a perfect Pearson correlation."

E. Garcia's position neither makes sense nor agrees with the literature. I would go into the math in more detail, or quote more authoritative sources, but I'm pretty sure Garcia now knows he is wrong. After E. Garcia made his incorrect claim about the difference between Spearman's correlation and Pearson's correlation, and after I corrected E. Garcia's source (which was in a comment on our blog), E. Garcia has stated the difference between Spearman's and Pearson's correctly. However, we want to make sure there's a good record of the points, and explain the what and why.

4) Rebuttal To Claim That PCA Is Not A Linear Method

This example is particularly interesting because it is about Principal Component Analysis (PCA), which is related to PageRank (something many SEOs are familiar with). In PCA one finds principal components, which are eigenvectors. PageRank is also an eigenvector. But I am digressing; let's discuss Garcia's claim.

After Dr. E. Garcia criticized a third party for using Pearson's Correlation because Pearson's only shows linear correlations, he criticized us for not using PCA. Like Pearson's, PCA can only find linear correlations, so I pointed out his contradiction:

Ben: "Given the top of your post criticizes someone else for using Pearson’s because of linearity issues, isn’t it kinda odd to suggest another linear method?"

To which E. Garcia responded: "Ben’s comments about... PCA confirms an incorrect knowledge about statistics" and "Be careful when you, Ben and Rand, talk about linearity in connection with PCA as no assumption needs to be made in PCA about the distribution of the original data. I doubt you guys know about PCA...The linearity assumption is with the basis vectors."

But before we get to the core of the disagreement, let me point out that E. Garcia is close to correct with his actual statement. PCA defines basis vectors such that they are linearly de-correlated, so it does not need to assume that they will be. But this is a minor quibble. The issue with Dr. E. Garcia's position is its implication that the linear aspect of PCA lies not in the correlations it finds in the source data, as I claimed, but only in the basis vectors.

So, there is the disagreement - analogous to how Pearson's Correlation only finds linear correlations, does PCA also only find linear correlations? Dr. E. Garcia says no. SEOmoz, and many academic publications, say yes. For instance:

"PCA does not take into account nonlinear correlations among the features" ("Kernel PCA for HMM-Based Cursive Handwriting Recognition"; Andreas Fischer and Horst Bunke 2009)

"PCA identifies only linear correlations between variables" ("Nonlinear Principal Component Analysis Using Autoassociative Neural Networks"; Mark A. Kramer (MIT), AIChE Journal 1991)

However, besides citing authorities, let's consider why his claim is incorrect. As E. Garcia imprecisely notes, the basis vectors are linearly de-correlated. As the sources he cites point out, PCA tries to represent the source data as linear combinations of these basis vectors. This is how PCA shows us correlations: by creating basis vectors that can be linearly combined to get close to the original data. We can then look at these basis vectors and see how aspects of our source data vary together, but because PCA only combines them linearly, it only shows us linear correlations. Therefore, PCA provides insight into linear correlations, even for non-linear data.
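A small sketch of the point in plain Python, with no PCA library (the data are a hypothetical toy example): take y = x^2 on a symmetric grid. y is completely determined by x, yet the covariance matrix that PCA works from has a zero off-diagonal entry, so the principal components are just the original x and y axes and PCA reports no relationship between the two variables. The closed-form eigen-decomposition of a 2x2 symmetric matrix below stands in for a general PCA routine.

```python
import math


def covariance_matrix(xs, ys):
    """Sample covariance entries (cxx, cxy, cyy) for two variables."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    cyy = sum((y - my) ** 2 for y in ys) / (n - 1)
    cxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    return cxx, cxy, cyy


def principal_axes(cxx, cxy, cyy):
    """Eigenvalues of a symmetric 2x2 covariance matrix and the angle of the first eigenvector."""
    mean = (cxx + cyy) / 2
    spread = math.hypot((cxx - cyy) / 2, cxy)
    angle = 0.5 * math.atan2(2 * cxy, cxx - cyy)  # direction of the first principal component
    return (mean + spread, mean - spread), angle


xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x * x for x in xs]   # y is a deterministic, non-linear function of x
cxx, cxy, cyy = covariance_matrix(xs, ys)
print(cxy)                 # 0.0: no linear co-variation at all
print(principal_axes(cxx, cxy, cyy))  # first component lies along the y axis alone
```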

5) Rebuttal To Claim About Small Correlations Not Being Published

Ted Dzubia suggests that small correlations are not interesting, or at least are not interesting because our dataset is too small. He writes:

Dzubia: "out of all the factors they measured ranking correlation for, nothing was correlated above .35. In most science, correlations this low are not even worth publishing. "

Academic papers frequently publish correlations of this size. On the first page of a Google Scholar search for "mean correlation coefficient" I see:

- The Stanford neurology paper I cited above to refute Garcia is reporting a mean correlation coefficient of 0.12.

- "Meta-analysis of the relationship between congruence and well-being measures" a paper with over 200 citations whose abstract cites coefficients of 0.06, 0.15, 0.21, and 0.31.

- "Do amphibians follow Bergmann's rule" which notes that "grand mean correlation coefficient is significantly positive (+0.31)."

These papers were not cherry-picked from a large number of papers. Contrary to Ted Dzubia's suggestion, the size of correlation that is interesting varies considerably with the problem. For our problem, looking at correlations in Google results, one would not expect a high correlation value for any single feature we looked at, unless one believes Google predominantly uses a single factor to rank results and one is only interested in that factor. We do not believe that. Google has stated on many occasions that it employs more than 200 features in its ranking algorithm. In our opinion, this makes correlations in the 0.1 - 0.35 range quite interesting.
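A back-of-the-envelope derivation shows why, under a deliberately simplified model that is our own assumption and not a description of Google's algorithm: if a ranking score S were the sum of k independent features with equal variance, each feature's (Pearson) correlation with the score would be only 1/sqrt(k), even though every feature genuinely matters.

```latex
% Hypothetical model: S = X_1 + \dots + X_k, independent X_i with equal variance \sigma^2
\operatorname{Cov}(X_i, S) = \operatorname{Var}(X_i) = \sigma^2,
\qquad
\operatorname{Var}(S) = k\,\sigma^2

\rho(X_i, S) = \frac{\sigma^2}{\sigma \cdot \sqrt{k}\,\sigma} = \frac{1}{\sqrt{k}},
\qquad
k = 200 \;\Rightarrow\; \rho \approx 0.071
```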

6) Rebuttal To Claim That Small Correlations Need A Bigger Sample Size

Dzubia: "Also notice that the most negative correlation metric they found was -.18.... Such a small correlation on such a small data set, again, is not even worth publishing."

Our dataset was over 100,000 results across over 11,000 queries, which is far more than sufficient for the size of correlations we found. The risk with small correlations and a small dataset is that it may be hard to tell whether the correlations are statistical noise. Generally, a result needs to be at least 1.96 standard deviations from zero to be considered statistically significant (the usual two-sided 95% threshold). For the particular correlation Dzubia brings up, one can see from the standard error value that it is 52 standard deviations from zero. 52 is substantially more than the 1.96 that is generally considered necessary.
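The significance check described above is simple arithmetic: divide the magnitude of the mean coefficient by its standard error and compare the result with 1.96, the two-sided 95% cutoff under a normal approximation. In this sketch the function names are hypothetical, and the standard error in the usage lines is back-calculated from the two figures quoted above (-0.18 and 52), not taken from our dataset.

```python
def standard_errors_from_zero(mean_coef, sem):
    """How many standard errors (a z-score) the mean coefficient lies from zero."""
    return abs(mean_coef) / sem


def is_significant(mean_coef, sem, threshold=1.96):
    # 1.96 is the two-sided 95% cutoff under a normal approximation.
    return standard_errors_from_zero(mean_coef, sem) >= threshold


implied_sem = 0.18 / 52  # back-calculated from the quoted figures, roughly 0.0035
print(round(standard_errors_from_zero(-0.18, implied_sem), 6))  # 52.0
print(is_significant(-0.18, implied_sem))                       # True
print(is_significant(-0.18, 0.1))  # False: a (hypothetical) much larger SE gives only 1.8
```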

We use a sample size so much larger than usual because we wanted to make sure the relative differences between correlation coefficients were not misleading. Although we feel this adds value to our results, it is beyond what is generally considered necessary to publish correlation results.

Conclusions

Some folks inside the SEO community have had disagreements about our interpretations and opinions regarding what the data means (and where/whether confounding variables exist to explain some points). As Rand carefully noted in our post on correlation data and his presentation, we certainly want to encourage this. Our opinions about where/why the data exists are just that, opinions, and shouldn't be ascribed any value beyond their use in informing your own thinking about the data sources. Our goal was to collect data and publish it so that our peers in the industry could review and interpret it.

It is also healthy to have a vigorous debate about how statistics such as these are best computed, and how we can ensure the accuracy of reported results. As our community is just starting to compute these statistics (Sean Weigold Ferguson, for example, recently submitted a post on PageRank using very similar methodologies), it is only natural that there will be some bumbling back and forth as we develop industry best practices. This is healthy, and it works to our industry's advantage.

The SEO community is the target of a lot of ad hominem attacks which try to associate all SEOs with the behavior of the worst. Although we can answer such attacks by pointing out great SEOs and great conferences, it is exciting that we've been able to elevate some attacks to include mathematical points, because when they are arguing math they can be definitively rebutted. On the six points of mathematical disagreement, the tally is pretty clear - SEO community: Six, SEO bashers: zero. Being SEOs doesn't make us infallible, so surely in the future the tally will not be so lopsided, but our tally today reflects how seriously we take our work and how we as a community can feel good about using data from this type of research to learn more about the operations of search engines.

Using this definition, let’s consider how best to ensure a quality user experience from start to finish. The user experience has to start with empathy for what the user would wish to encounter in your digital business profile. More and more digital customers expect the same effort to go into their digital experience as they would expect when arriving at your business in person. For too many years, the marketing method of welcoming users to a site was to simply inundate them with data and articulate information. While both are important, the same can be accomplished with a billboard. Instead, we need to consider how to welcome them to our sites, even if we are ranking at the top for a keyword. (What is the point of ranking the highest if you also have the highest bounce rate?)

So, we start with empathy:

Why did this customer search this term?

Choosing keywords needs to be user oriented. For too many years, marketing directors and SEO gurus have chosen keywords solely based on sales and conversions, with little regard for other reasons a customer may come to their site. As a result, the market has been marginalized when it comes to keyword targeting.

Users are not completely satisfied with search engine results now due to the mixed bag of outcomes in the results. Much of this is the fault of our SEO strategies, which have pushed businesses to the tops of search results by providing the best answer in text, even when they may not be the “best answer” in reality. This has caused the modern consumer to become less and less impressed by search engine placement. Don’t get me wrong, local services and immediate-need sales still vitally need top placement in the search engines, but non-immediate purchases are becoming more and more thought-based.

Local searches for plumbers will certainly still benefit from the top listing in organic and map, but what about for their non-emergency sales? The searching customers not requiring an impulse purchase are becoming more reliant on user investigation and “purchase assurance” than just the need to address the issue in the easiest way possible.

As a society, we are becoming more diligent about the items we individually care most for, and as a result, our marketing methods have to shift to accommodate digital narcissism. We are obsessed with the desire to garner the attention of our fellows and to be recognized by them as individual “truth givers” and “knowledgeable people.”

People inherently work for the acceptance of others, and with the advent of the digital age, people have begun basing self-worth and assessment on how others view their ability to be knowledgeable resources of data and information. As a result, you should start by considering what the individual will be looking for to satisfy that internal need and proceed with a marketing strategy that incorporates this knowledge.

Google coined the term “Zero Moment of Truth”; I would go so far as to say that this is the precursor to it. Empathizing with the average user and breaking down his core understanding has to come first. Doing so allows us to choose keywords based on what the user actually wants in the way of an experience, not just what we wish to sell them.

There are many deeper aspects to this line of thought. Next, we will discuss why identifying customers' and visitors' interests before they do is a vital use of this empathy and the next step in your User Experience Based marketing strategy.

Using this definition, let’s consider how best to ensure a quality user experience from start to finish. The user experience has to start with empathy for what the user would wish to encounter in your digital business profile. More and more digital customers want the same effort for an experience placed into digital media as they would expect for arriving at your business in person. For too many years, the marketing method of welcoming users to a site was to simply inundate them with data and articulate information. While both are important, the same can be garnered using a billboard. Instead, we need to consider how to welcome them to our sites, even if we are ranking the top for a keyword. (what is the point of ranking the highest if you also have the highest bounce rate?).

So, we start with empathy:

Why did this customer search this term?

Choosing keywords needs to be user oriented. For too many years, marketing director and SEO gurus have chosen keywords solely based on sales and conversions with little regard to additional reasons a customer may come to their site, as a result, the market has been marginalized when it comes to keyword targeting.

Users are not completely satisfied with Search Engine Results now due to the mixed bag of outcomes in the results. Much of this is the fault of our SEO Strategies that have pushed businesses to the tops of search results by providing the best answer in text, even when they may not be the “best answer” in reality. This has caused the modern consumer to become less and less impressed by search engine placement. Don’t get me wrong, local services and immediate need sales are still in vital need of top placement in the search engines, but the non-immediate purchases are becoming more and more thought based.

A plumber will certainly still benefit from the top organic and map listing for local emergency searches, but what about non-emergency sales? Searchers who are not making an impulse purchase rely more on their own investigation and “purchase assurance” than on simply addressing the issue in the easiest way possible.

As a society, we are becoming more diligent about the things we individually care most about, and as a result, our marketing methods have to shift to accommodate digital narcissism. We are obsessed with garnering the attention of our peers and being recognized by them as individual “truth givers” and “knowledgeable people.”

People inherently work for the acceptance of others, and with the advent of the digital age, people have begun basing their self-worth on how others view their ability to be knowledgeable sources of data and information. You should therefore start by considering what the individual will be looking for to satisfy that internal need, and then build a marketing strategy that incorporates this knowledge.

Google coined the term “Zero Moment of Truth” (ZMOT); I would go so far as to say that empathy is the precursor to it. Empathizing with the average user and breaking down his core understanding has to come first. Doing so allows us to choose keywords based on what the user actually wants in the way of an experience, not just on what we wish to sell them.

There are many deeper aspects to this line of thought. Next, we will discuss why discovering the customer’s or visitor’s interests before they do is a vital use of this empathy and the next step in your User Experience Based marketing strategy.

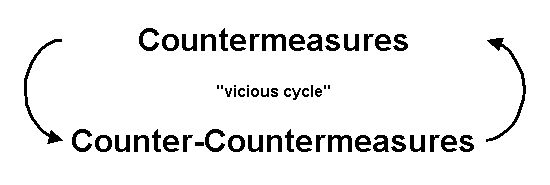

In some cases, this serves a very legitimate purpose, such as incentivizing positive reviews and ratings from clients to overcome someone who griped about not getting his water and hot bread fast enough at a restaurant. There are certainly legitimate cases like this, in which businesses present a positive image of themselves to the public. But the topic has a flip side: some people purposefully mislead the public with positive or negative spam about a business, which undermines any chance of receiving accurate information. While this might seem like a trivial act, it damages our reasonable expectation of receiving correct and verifiable data in search results.

Reputation Management Scams

When it comes to the dirtier uses of Content Countermeasures, the "

No, I am not coming down on narratives themselves. As I said in the intro to this post, narratives are shaped by the marketer, but once they become completely falsified, nothing is left but a dishonest scam perpetrated on the reader. The petty marketers who believe there is a magical line of lies they can hover on while keeping their integrity intact are some of the most genuine and shining examples of cognitive dissonance available.

SEO Assassins

These are the lowest bottom-feeders of the internet. They include "Yelpers", "Competing Reviewers", and all the others willing to destroy a business's reputation. Content Assassins, or SEO Assassins, are often written off as competitors or disgruntled employees, but lately we have found more instances where the culprit had little to no association with the business. The internet gives strength to those who wish to damage others with impunity.

A restaurant client of ours apparently slighted a patron by serving his therapist. The patron took this as a personal slight and launched a fake review and spam campaign to destroy the restaurant's reputation. Using a photoshopped image of a comment, he made it appear that the restaurant (run by a gay man) had made anti-gay statements to him on Facebook. This one fake image, posted in several LGBT social media groups, caused over 500 negative reviews in one night. By the time the restaurant came to us, there were over 900 review accounts to which we had to send individual requests and explanations.